Using Ollama With LangChain Agents

Planning ahead is the secret to staying organized and making the most of your time. A printable calendar is a simple but powerful tool to help you lay out important dates, deadlines, and personal goals for the entire year.

Stay Organized with a Printable Calendar

The Printable Calendar 2025 offers a clear overview of the year, making it easy to mark meetings, vacations, and special events. You can hang it up on your wall or keep it at your desk for quick reference anytime.

Choose from a variety of stylish designs, from minimalist layouts to colorful, fun themes. These calendars are made to be easy to use and functional, so you can stay on task without clutter.

Get a head start on your year by downloading your favorite Printable Calendar 2025. Print it, personalize it, and take control of your schedule with clarity and ease.

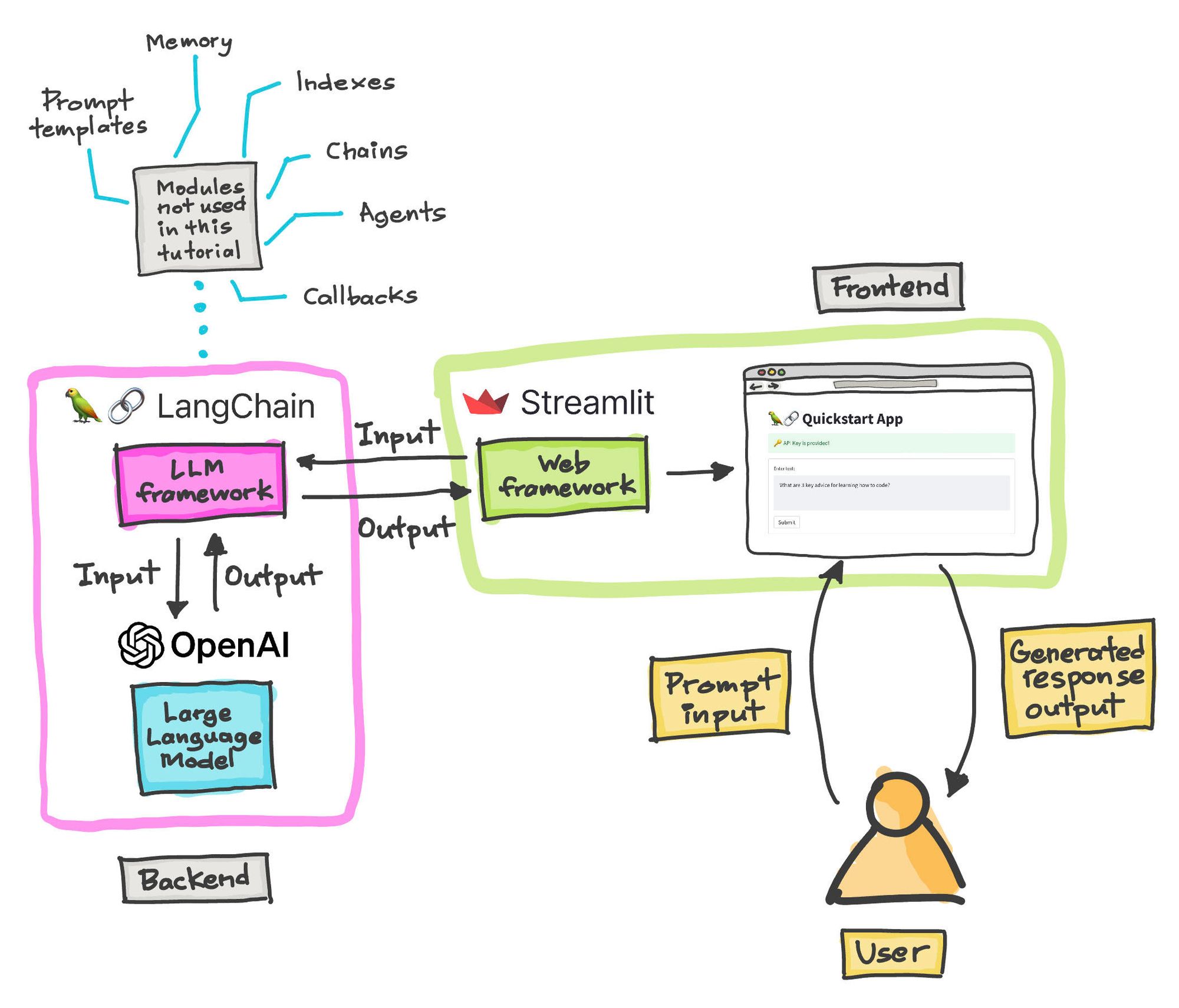

Build Your Own RAG And Run It Locally: LangChain, Ollama, Streamlit

Oct 29, 2019 · It looks like you can only use `await using` with an `IAsyncDisposable`, and you can only use `using` with an `IDisposable`, since neither one inherits from the other. The only time you …

Aug 22, 2024 · I'm trying out uv to manage my Python project's dependencies and virtualenv, but I can't see how to install all my dependencies for local development, including the development …
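The snippet above about `await using` describes a C# rule: each statement accepts only objects that implement its own interface. C# is outside the scope of this page, so what follows is only a rough Python analogue, assuming Python's context-manager protocols as the counterpart: `with` needs `__enter__`/`__exit__`, `async with` needs `__aenter__`/`__aexit__`, and the two protocols are independent, so an object that implements one cannot be used with the other statement. `SyncResource`, `AsyncResource`, and the helper functions are hypothetical names for illustration.

```python
import asyncio


class SyncResource:
    """Hypothetical resource implementing only the synchronous protocol."""

    def __init__(self) -> None:
        self.closed = False

    def __enter__(self) -> "SyncResource":
        return self

    def __exit__(self, exc_type, exc, tb) -> None:
        self.closed = True  # cleanup, loosely like IDisposable.Dispose


class AsyncResource:
    """Hypothetical resource implementing only the asynchronous protocol."""

    def __init__(self) -> None:
        self.closed = False

    async def __aenter__(self) -> "AsyncResource":
        return self

    async def __aexit__(self, exc_type, exc, tb) -> None:
        self.closed = True  # cleanup, loosely like IAsyncDisposable.DisposeAsync


def supports_with(obj: object) -> bool:
    # `with` looks up __enter__/__exit__ on the type, not the instance.
    return hasattr(type(obj), "__enter__") and hasattr(type(obj), "__exit__")


def supports_async_with(obj: object) -> bool:
    # `async with` looks up __aenter__/__aexit__ on the type.
    return hasattr(type(obj), "__aenter__") and hasattr(type(obj), "__aexit__")


async def try_async_with_on_sync() -> str:
    # A sync-only object in `async with` fails at runtime:
    # TypeError on Python 3.11+, AttributeError on older versions.
    try:
        async with SyncResource():
            pass
    except (TypeError, AttributeError) as err:
        return type(err).__name__
    return "no error"


if __name__ == "__main__":
    print(supports_with(SyncResource()), supports_async_with(SyncResource()))  # True False
    print(supports_with(AsyncResource()), supports_async_with(AsyncResource()))  # False True
    print(asyncio.run(try_async_with_on_sync()))
```

Unlike C#, Python does not reject the mismatch at compile time; the error only appears when the statement runs.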

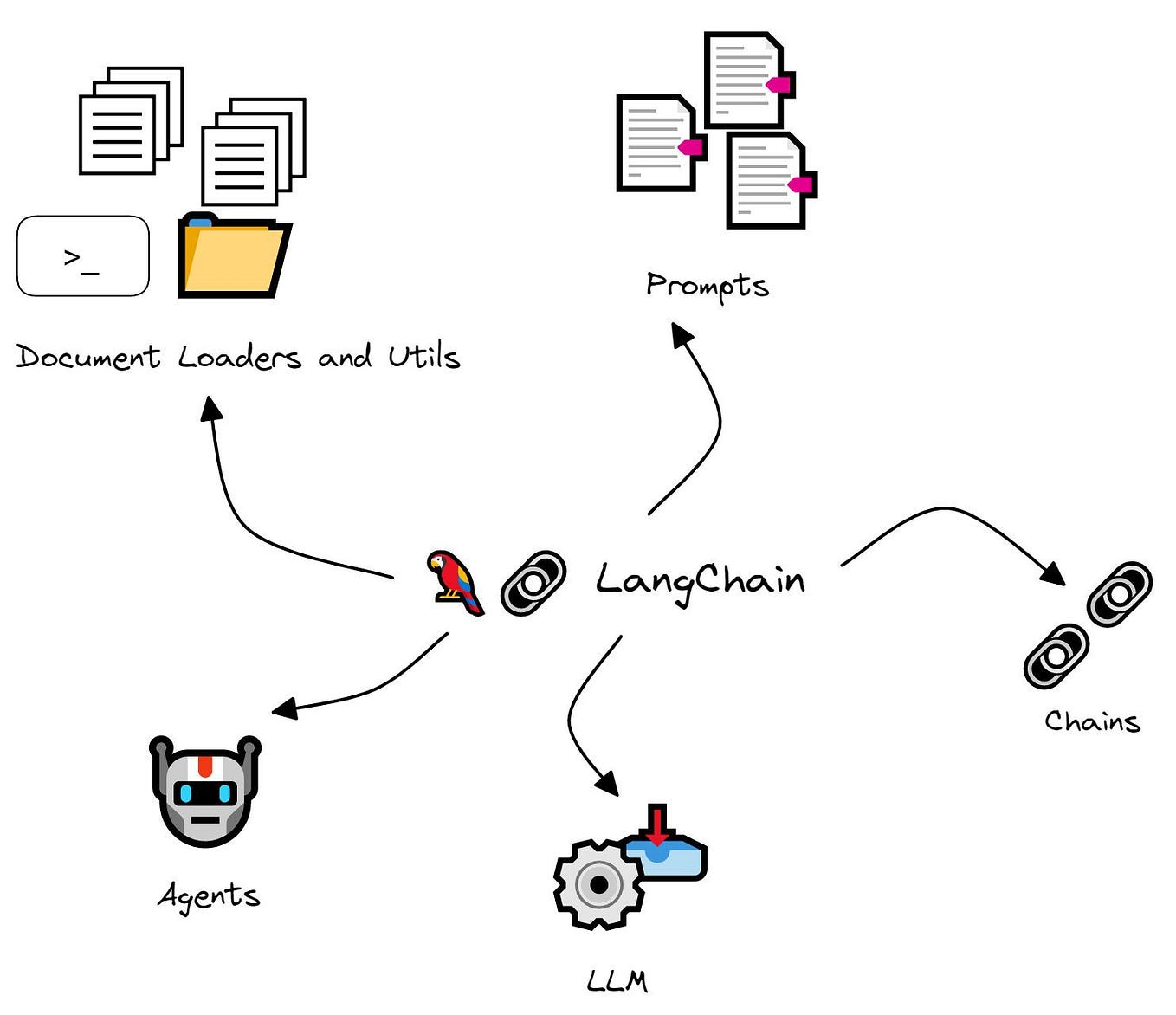

How To Build An LLM Application Using LangChain And OpenAI To Build …

How to obtain a public IP address using the Windows command prompt?

Jul 29, 2015 · The `using` statement ensures that `Dispose` is called even if an exception occurs while you are calling methods on the object. You can achieve the same result by putting the …
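The snippet above about the `using` statement describes a cleanup guarantee: `Dispose` runs even when an exception is thrown. As a hedged Python analogue (not C#), the sketch below shows a `with` block and an explicit `try`/`finally` block, which in Python give the same guarantee. `Resource`, `dispose`, and `work` are hypothetical names chosen to mirror the C# terms.

```python
class Resource:
    """Hypothetical disposable resource that records whether cleanup ran."""

    def __init__(self) -> None:
        self.disposed = False

    def dispose(self) -> None:
        self.disposed = True

    def work(self) -> None:
        raise RuntimeError("failure while using the resource")

    # Context-manager protocol, so the object can be used with `with`.
    def __enter__(self) -> "Resource":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        self.dispose()
        return False  # do not swallow the exception


def with_statement() -> Resource:
    res = Resource()
    try:
        with res:
            res.work()
    except RuntimeError:
        pass  # the exception still propagates out of `with`, caught here
    return res


def try_finally() -> Resource:
    res = Resource()
    try:
        try:
            res.work()
        finally:
            res.dispose()  # runs whether or not work() raised
    except RuntimeError:
        pass
    return res


if __name__ == "__main__":
    print(with_statement().disposed)  # True
    print(try_finally().disposed)  # True
```

Because `__exit__` returns `False`, the exception still reaches the caller after cleanup, much as a C# `using` block disposes the object without suppressing the exception.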

Gallery for Using Ollama With LangChain Agents

An OpenAI Project With LangChain Integration (Upwork)

Your Personal Copy Editor: Build An LLM-Backed App Using LangChain.js

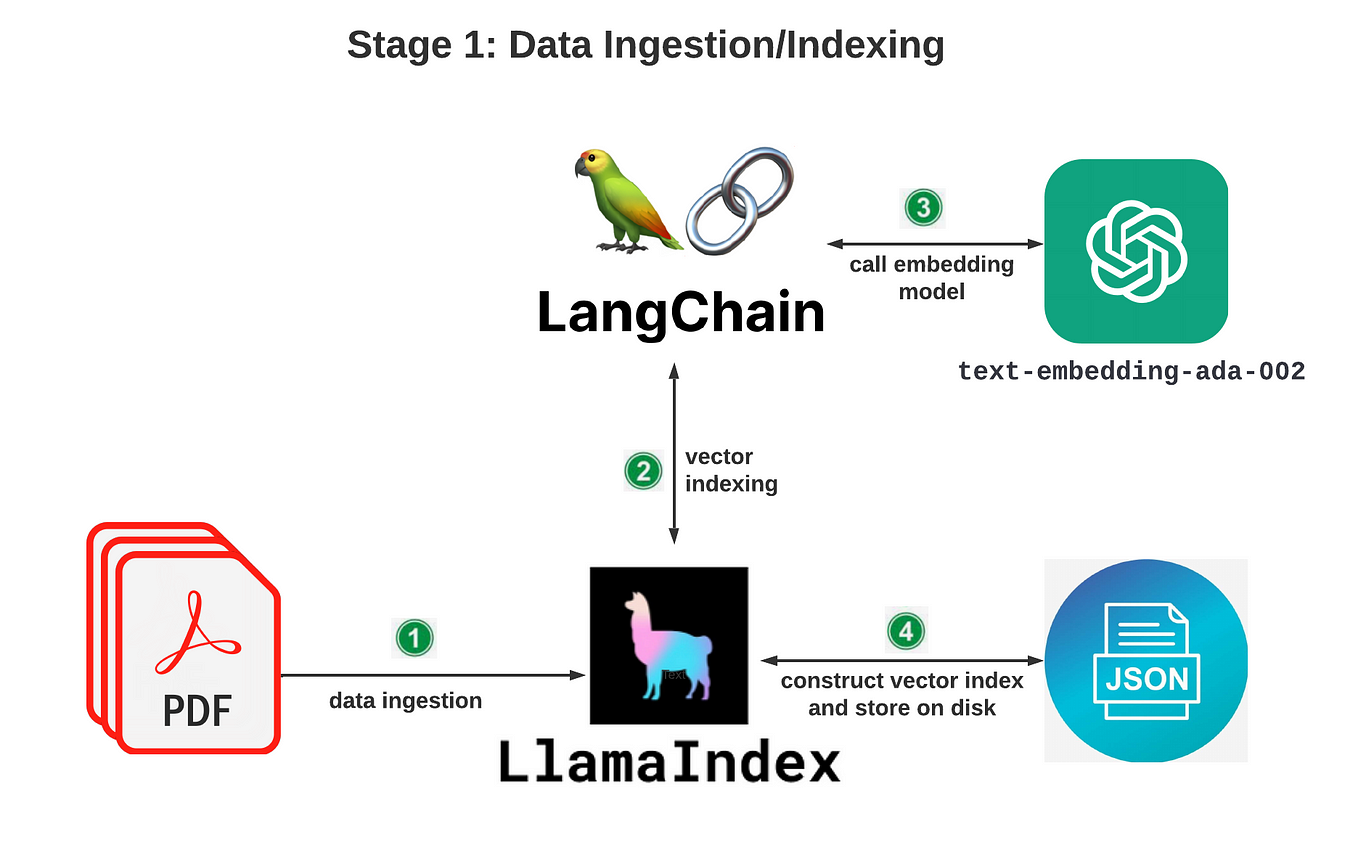

Unlocking The Power Of LLMs: A Deep Dive Into LangChain PGVector

Chat With A Website Using Chainlit, Streamlit, LangChain, And Ollama

How To Deploy A Local LLM Using Ollama Server And Ollama Web UI On Amazon …