Using Ollama With Cursor Library

Planning ahead is the secret to staying organized and making the most of your time. A printable calendar is a simple but effective tool to help you lay out important dates, deadlines, and personal goals for the entire year.

Stay Organized with Using Ollama With Cursor Library

The Printable Calendar 2025 offers a clean overview of the year, making it easy to mark meetings, vacations, and special events. You can hang it up on your wall or keep it at your desk for quick reference anytime.

Choose from a variety of modern designs, from minimalist layouts to colorful, fun themes. These calendars are made to be user-friendly and functional, so you can focus on planning without distraction.

Get a head start on your year by downloading your favorite Printable Calendar 2025. Print it, customize it, and take control of your schedule with confidence and ease.

How to Run LLMs Locally With a Web UI Like ChatGPT: Ollama Web UI

Dec 27, 2013 · What is the logic behind the "using" keyword in C++? It is used in different situations, and I am trying to find out whether they all have something in common and whether there is a reason the "using" keyword is used this way.

In C#, the using statement allows the programmer to specify when objects that use resources should release them. The object provided to the using statement must implement the IDisposable interface. This interface provides the Dispose method, …

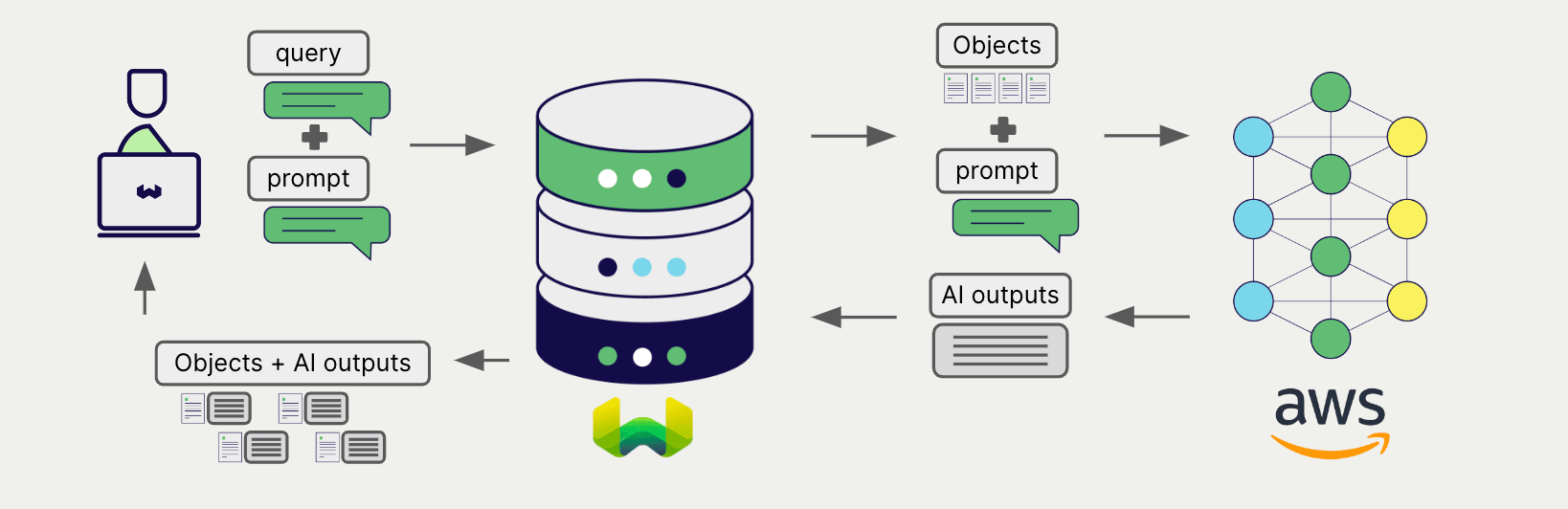

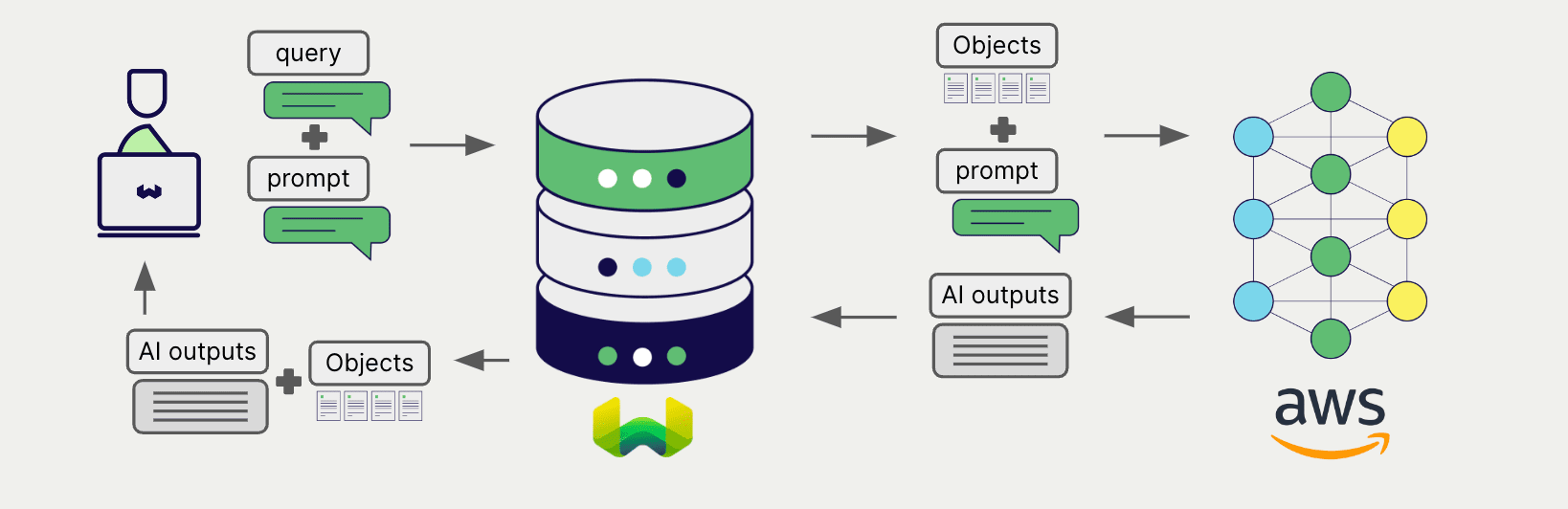

AWS Weaviate Weaviate

Using statements have nothing to do with exceptions. A using block just ensures that Dispose is called on the object in the using block when control exits that block. In C++, by contrast, the keyword appears in directives such as using namespace std.
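
The cleanup guarantee described above can be illustrated with a rough analogy in Python, whose with statement plays a role similar to a C# using block. This is only a sketch and not C# code: the Resource class, its close method (standing in for Dispose), and the use_resource helper are hypothetical names invented for the illustration.

```python
class Resource:
    """Hypothetical resource; close() stands in for C#'s Dispose()."""

    def __init__(self, name):
        self.name = name
        self.closed = False

    def __enter__(self):
        # Called when the with block is entered.
        return self

    def __exit__(self, exc_type, exc, tb):
        # Called when the with block exits, normally or via an exception.
        self.close()
        return False  # do not swallow the exception; cleanup only

    def close(self):
        self.closed = True


def use_resource(fail=False):
    """Run a with block and report whether cleanup happened."""
    res = Resource("demo")
    try:
        with res:
            if fail:
                raise ValueError("simulated failure")
    except ValueError:
        # Handling the error is the caller's job, separate from cleanup.
        pass
    return res.closed
```

Calling use_resource() and use_resource(fail=True) both report that the resource was closed. The block guarantees cleanup on exit, while handling the exception remains a separate concern.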

Gallery for Using Ollama With Cursor Library

Qwen 1.5: Small LLM, Massive Results

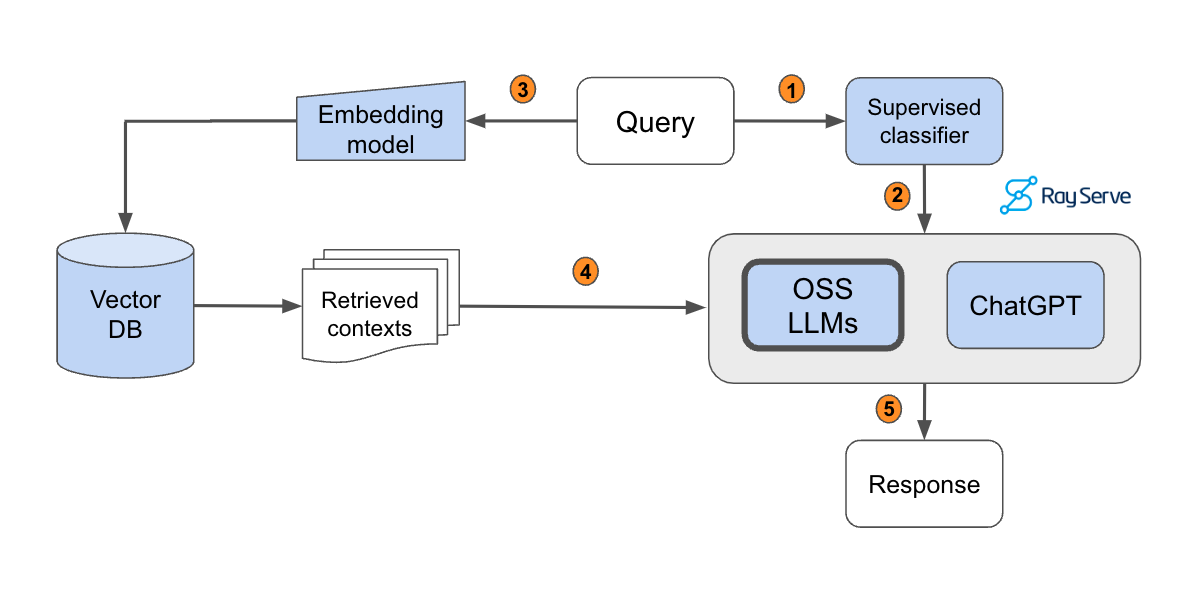

LlamaIndex Qdrant Ollama FastAPI RAG Api fastapi ericliu2017 AI

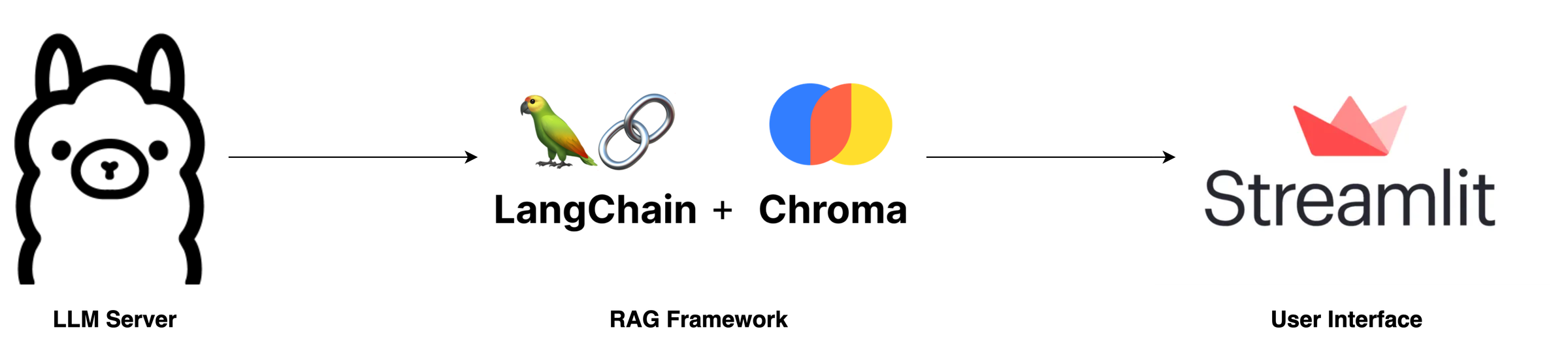

Build Your Own RAG And Run It Locally Langchain Ollama Streamlit

MLC LLM: Deploying Large Language Models for Native Device Performance

Generative AI Weaviate

The Local LLM Stack You Should Deploy: Ollama, Supabase, Langchain And

Generating Images With Stable Diffusion And OnnxStream On The Raspberry

Quick Start Guide: Ollama's New Python Library, by Jake Nolan (Medium)