PyTorch Model Parallel

Planning ahead is the key to staying organized and making the most of your time. A printable calendar is a simple but effective tool to help you lay out important dates, deadlines, and personal goals for the entire year.

Stay Organized with PyTorch Model Parallel

The Printable Calendar 2025 offers a clean overview of the year, making it easy to mark meetings, vacations, and special events. You can hang it up on your wall or keep it at your desk for quick reference anytime.

Choose from a range of modern designs, from minimalist layouts to colorful, fun themes. These calendars are made to be user-friendly and functional, so you can focus on planning without clutter.

Get a head start on your year by downloading your favorite Printable Calendar 2025. Print it, customize it, and take control of your schedule with clarity and ease.

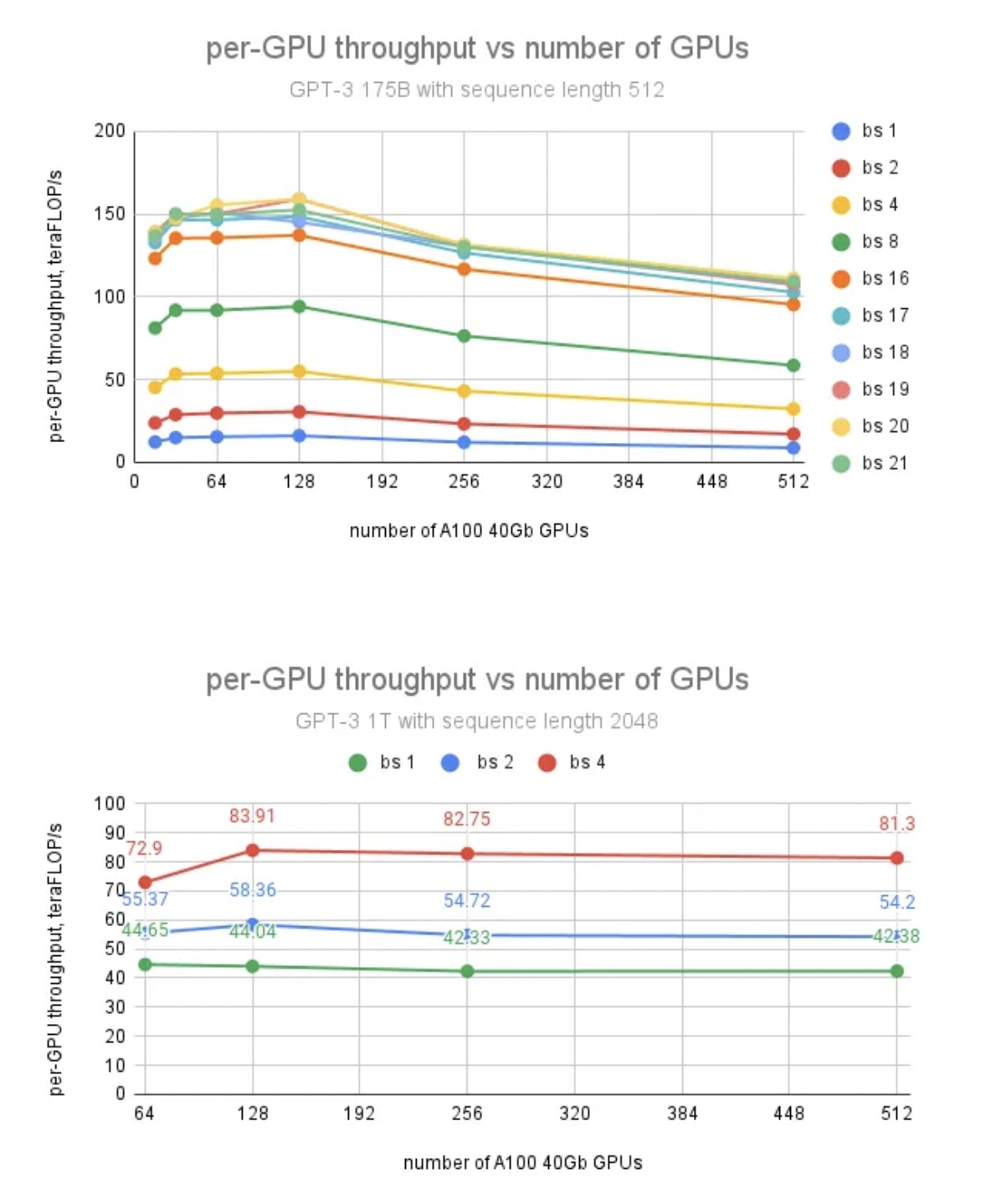

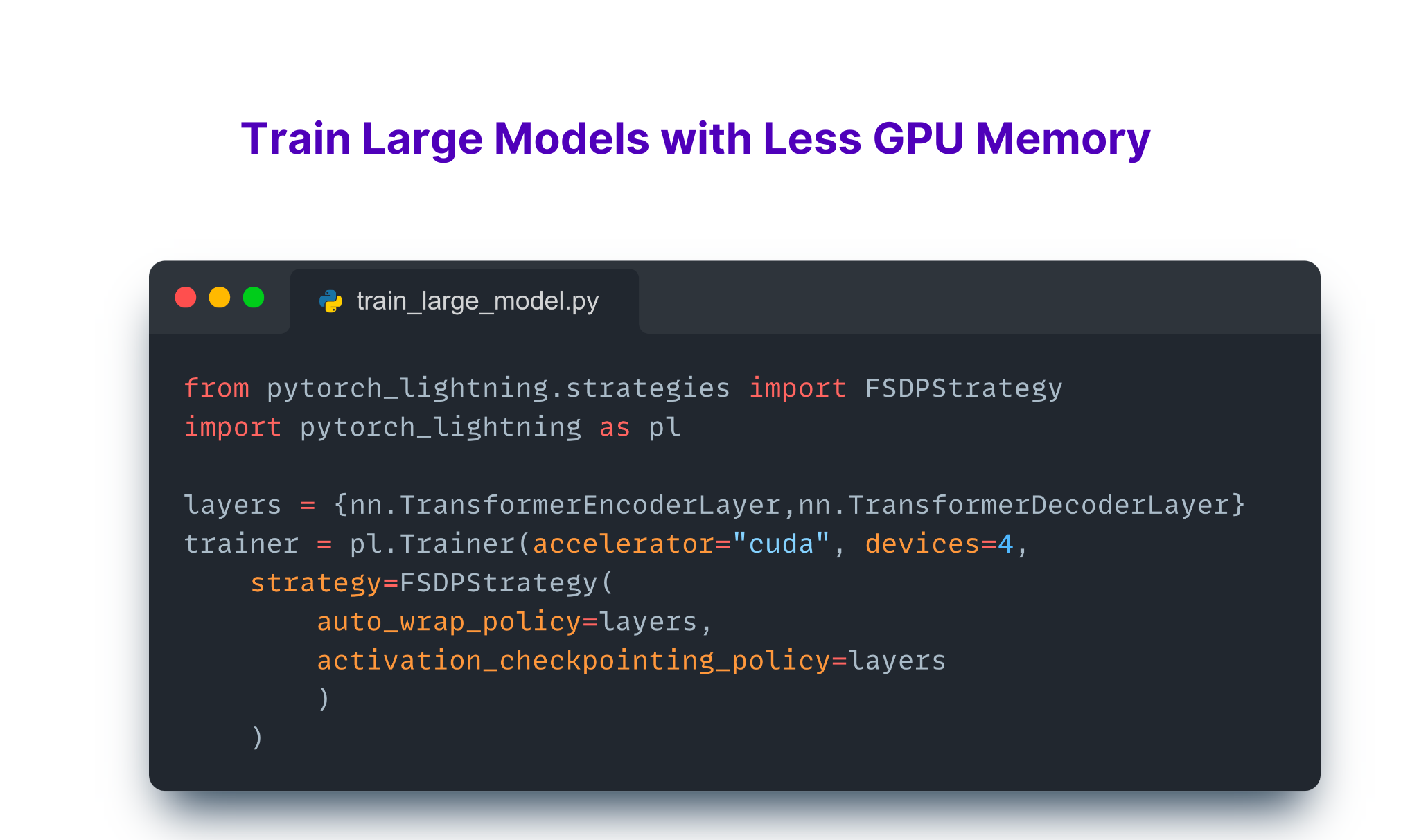

PyTorch FSDP Fully Sharded Data Parallel MLTalks

For the majority of PyTorch users, installing from a pre-built binary via a package manager will provide the best experience. However, there are times when you may want to install the …

Apr 23, 2025 · We are excited to announce the release of PyTorch® 2.7 (release notes)! This release features: support for the NVIDIA Blackwell GPU architecture and pre-built wheels for …

SegmentFault

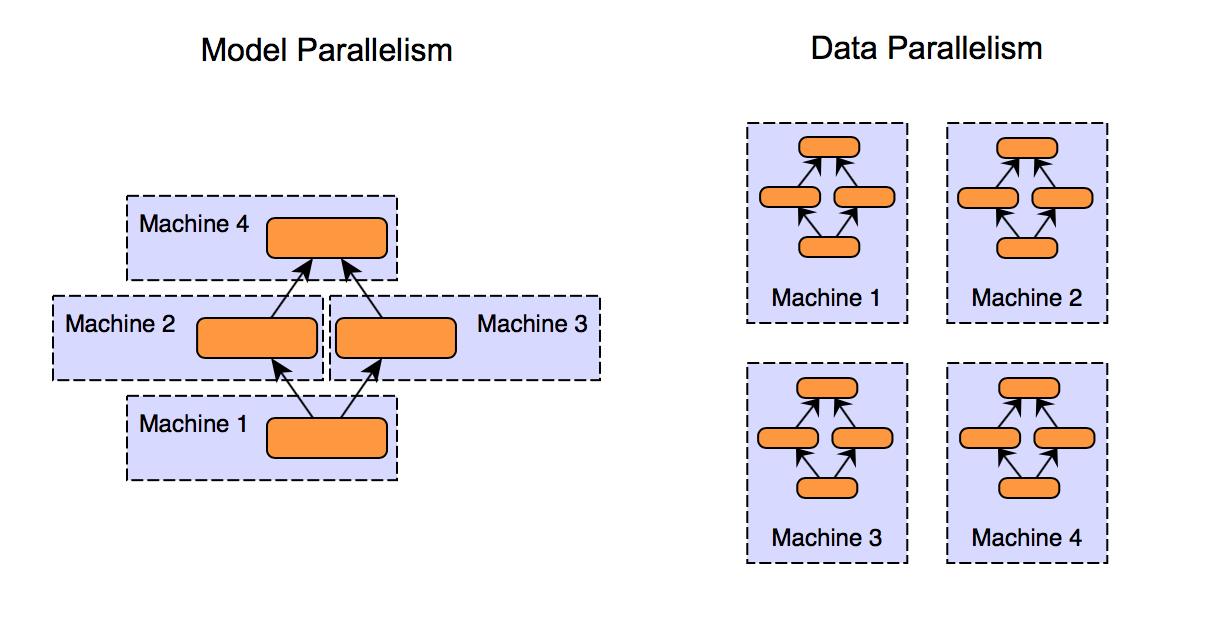

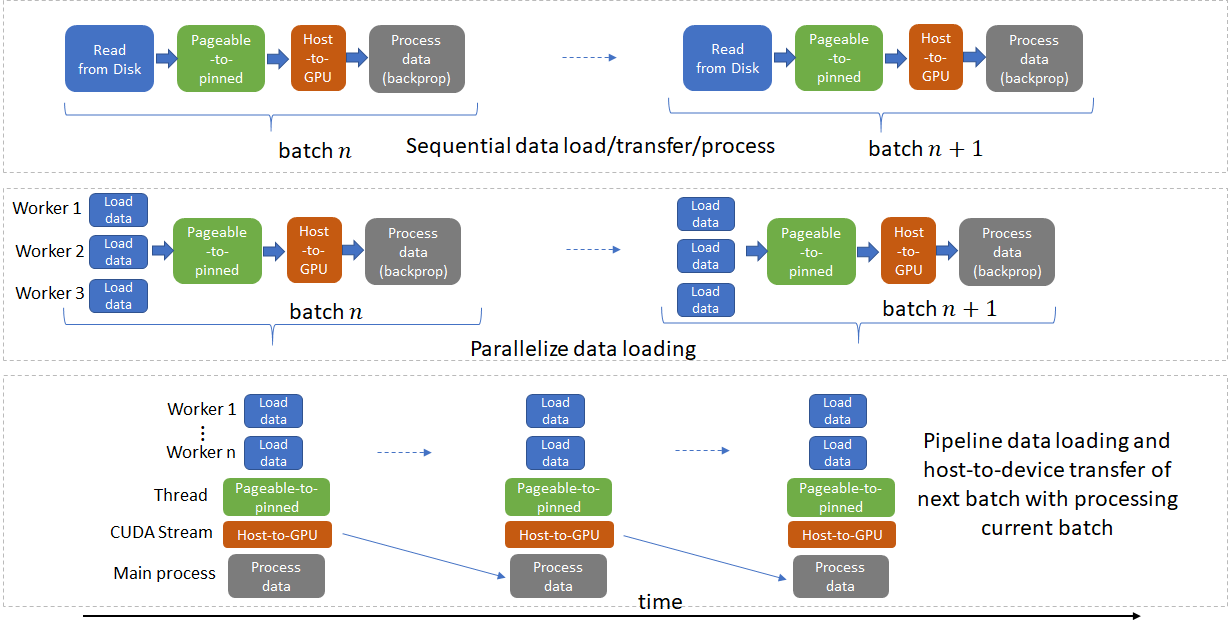

Most machine learning workflows involve working with data, creating models, optimizing model parameters, and saving the trained models. This tutorial introduces you to a complete ML …

PyTorch documentation: PyTorch is an optimized tensor library for deep learning using GPUs and CPUs. Features described in this documentation are classified by release status: Stable …

Gallery for PyTorch Model Parallel

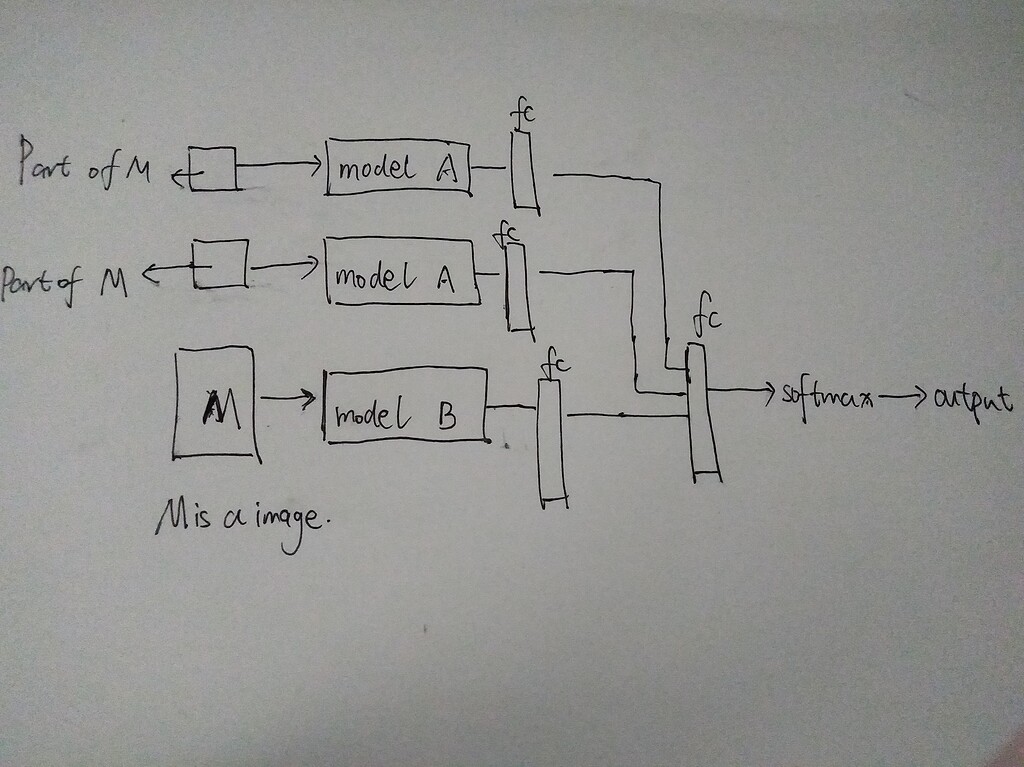

How To Parallel Multiple Models PyTorch Forums

PyTorch

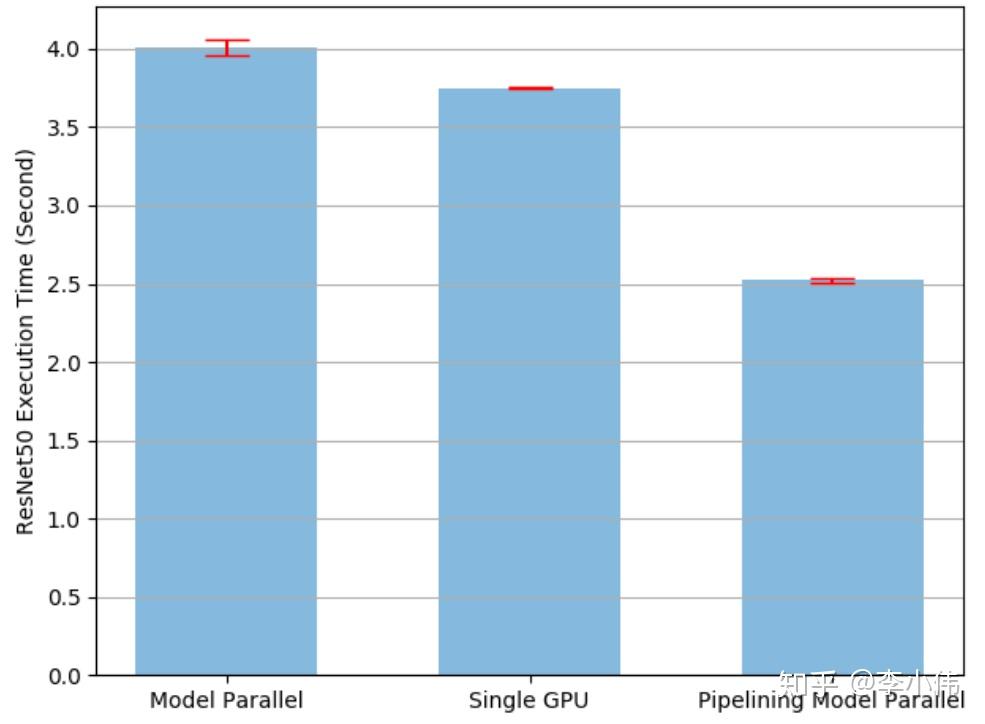

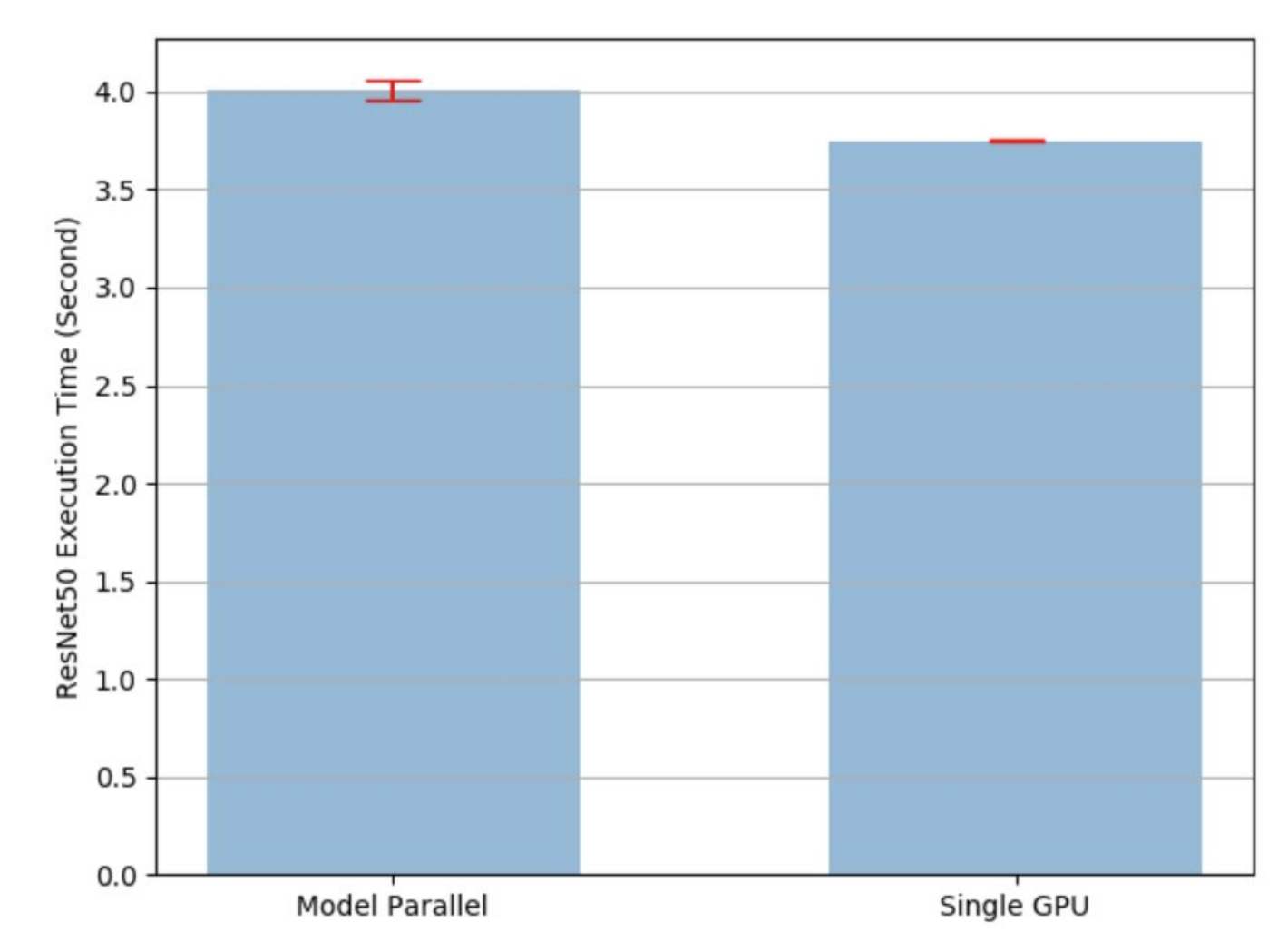

PyTorch 81 Model Parallel

GitHub Bindog pytorch model parallel A Memory Balanced And

Pytorch Lightning Model Zoo Image To U

Math Model Making TLM For Exhibitions Parallel Lines With Traversals

Pytorch Training Multi Gpu Image To U

PyTorch Model Parallel

Fine tuning 20B LLMs With RLHF On A 24GB Consumer GPU