PySpark Write To Single CSV

08 Combine Multiple Parquet Files Into A Single Dataframe PySpark

Mar 23, 2018 · I have a dataframe with 1000 columns. I need to save this dataframe as a .txt file (not as .csv) with no header, and the mode should be "append". I used the command below, which is not working: df.coalesce(1).write ...

spark.conf.set("spark.sql.execution.arrow.pyspark.enabled", "true") For more details you can refer to my blog post, Speeding up the conversion between PySpark and Pandas DataFrames.
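The coalesce(1) approach mentioned in the question above can be sketched for the single-CSV case as follows. This is a minimal sketch and not the original poster's code: the helper names `promote_part_file` and `write_single_csv` are hypothetical, and it assumes Spark is writing to the local filesystem (for example in local mode), so that Python's own file functions can see the output directory. Spark always writes a directory of part files, so the sketch writes to a temporary directory and then moves the one part file to the requested name.

```python
import glob
import os
import shutil
import tempfile


def promote_part_file(tmp_dir, target_path):
    """Move the single part-* file found in tmp_dir to target_path.

    Hypothetical helper. Marker files such as _SUCCESS and hidden
    .crc checksum files do not match the "part-*" pattern.
    """
    parts = glob.glob(os.path.join(tmp_dir, "part-*"))
    if len(parts) != 1:
        raise RuntimeError(f"expected exactly one part file, found {len(parts)}")
    shutil.move(parts[0], target_path)
    return target_path


def write_single_csv(df, target_path, header=True):
    """Write a PySpark DataFrame to one CSV file (local filesystem assumed)."""
    tmp_dir = tempfile.mkdtemp(prefix="spark_csv_")
    try:
        # coalesce(1) collapses the data into one partition, so Spark
        # emits a single part file inside tmp_dir.
        (df.coalesce(1)
           .write.mode("overwrite")
           .option("header", header)
           .csv(tmp_dir))
        return promote_part_file(tmp_dir, target_path)
    finally:
        # Remove the leftover directory (_SUCCESS marker, checksum files).
        shutil.rmtree(tmp_dir, ignore_errors=True)
```

A call would look like write_single_csv(df, "report.csv"), where df is an existing DataFrame. Because coalesce(1) funnels every row through a single task, this is only reasonable for outputs that fit comfortably on one executor. On HDFS, S3, or DBFS the move step would need that filesystem's own API instead of shutil.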

Create DataFrame From CSV File In PySpark 3 0 On Colab Part 3 Data

I'm trying to run PySpark on my MacBook Air. When I try starting it up, I get the error: Exception: Java gateway process exited before sending the driver its port number when sc = SparkContext() is ...

Jun 28, 2018 · As suggested by pault, the data field is a string field. Since the keys are the same (i.e. key1, key2) in the JSON string across rows, you might also use json_tuple (this function is new in version 1.6, based on the documentation).

Gallery for PySpark Write To Single CSV

How To Write A MapReduce Function In Databricks Using PySpark Data

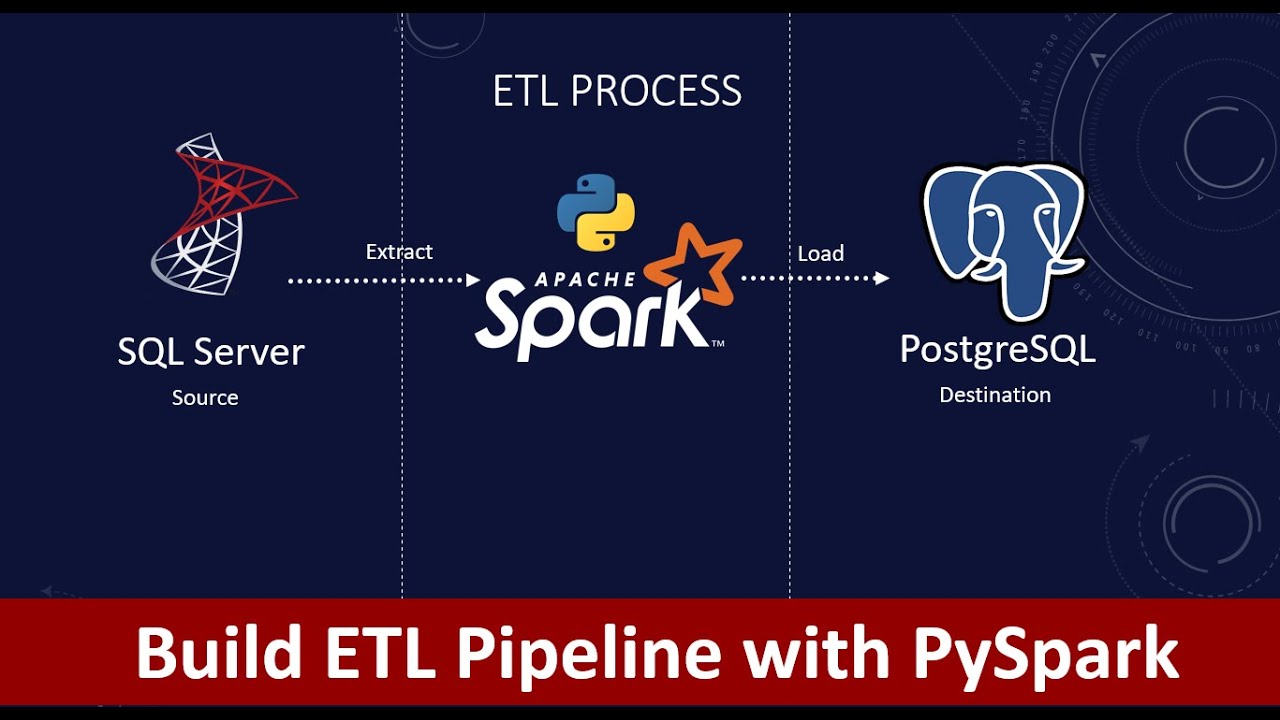

How To Build ETL Pipelines With PySpark Build ETL Pipelines On

6 How To Write Dataframe As Single File With Specific Name In PySpark

Read CSV File With Header And Schema From DBFS PySpark Databricks

30 The BigData File Formats Read CSV In PySpark Databricks Tutorial

How To Use PySpark And Spark SQL MatPlotLib And Seaborn In Azure

How To Use Every Single Data Format With Spark Using CSV XML JSON

Pyspark Dataframe Write To Single Json File With Specific Name YouTube

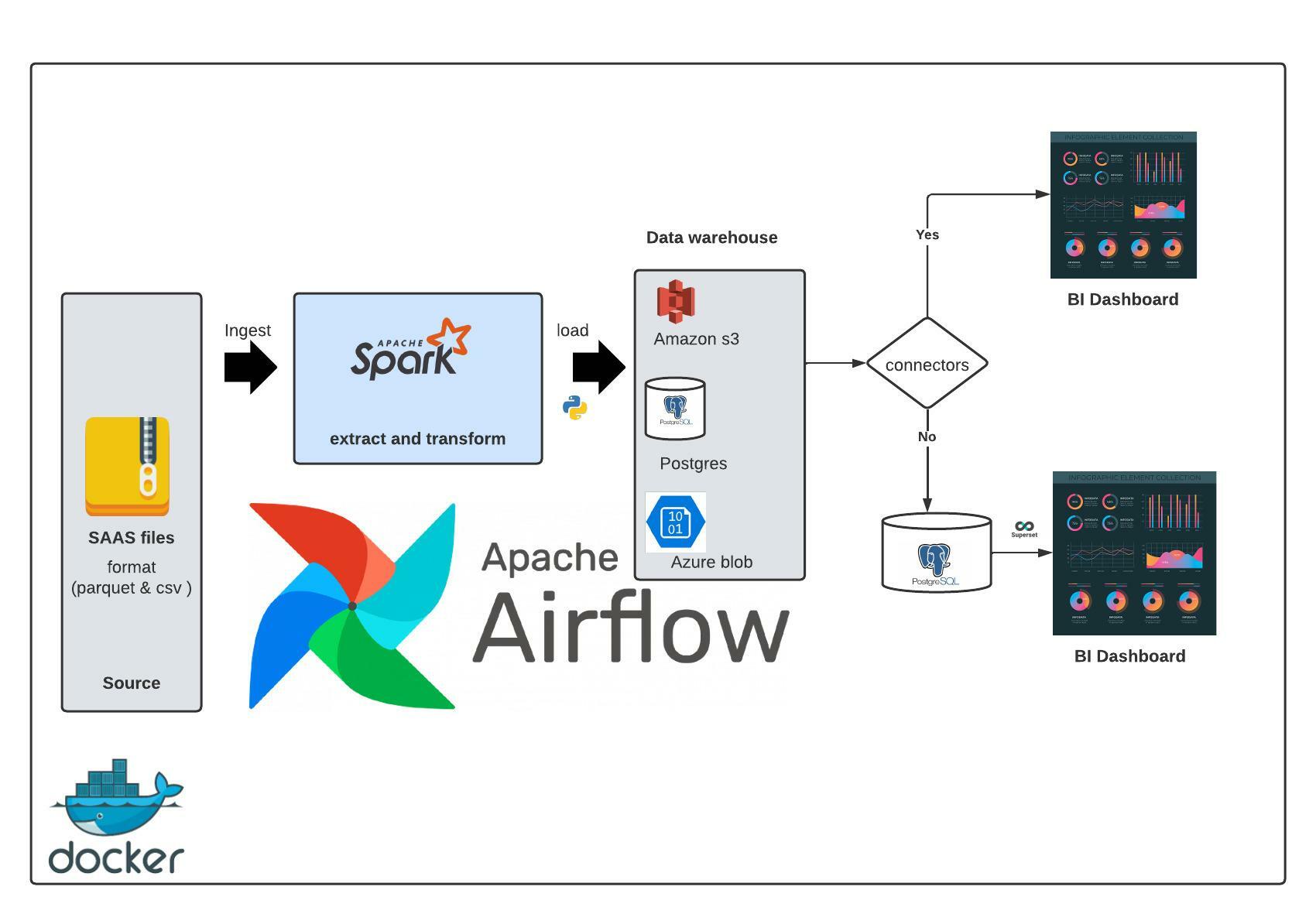

Prescriber ETL data pipeline Showing End to End Implementation Using

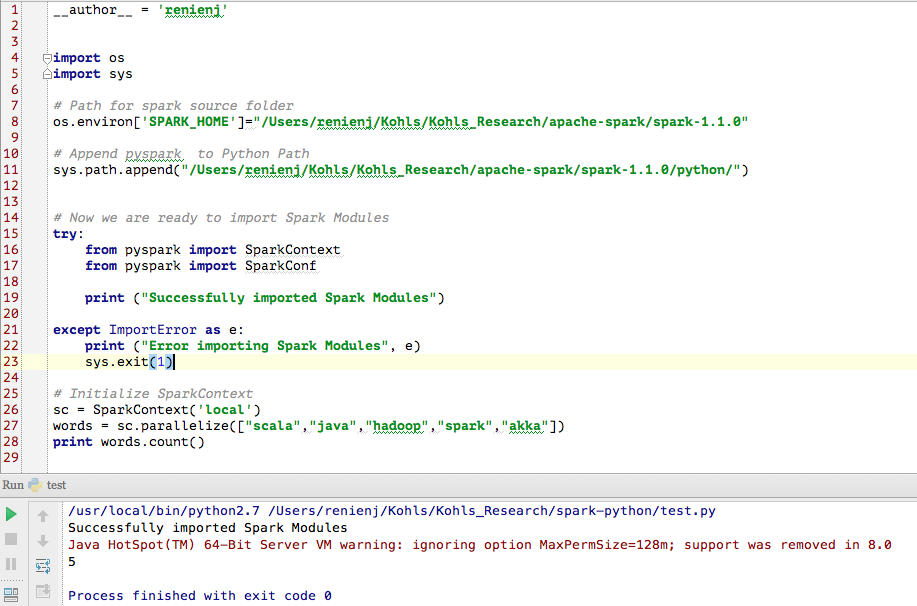

Accessing PySpark In PyCharm Renien John Joseph