PySpark DataFrame Apply


Python: Apply StringIndexer to Several Columns in a PySpark DataFrame

Aug 22, 2017 · I have a dataset consisting of a timestamp column and a dollars column. I would like to find the average number of dollars per week ending at the timestamp of each row. I was …

I have a PySpark DataFrame consisting of one column, called json, where each row is a Unicode string of JSON. I'd like to parse each row and return a new DataFrame where each row is the …

4 Different Ways to Apply a Function on a Column in a DataFrame Using …

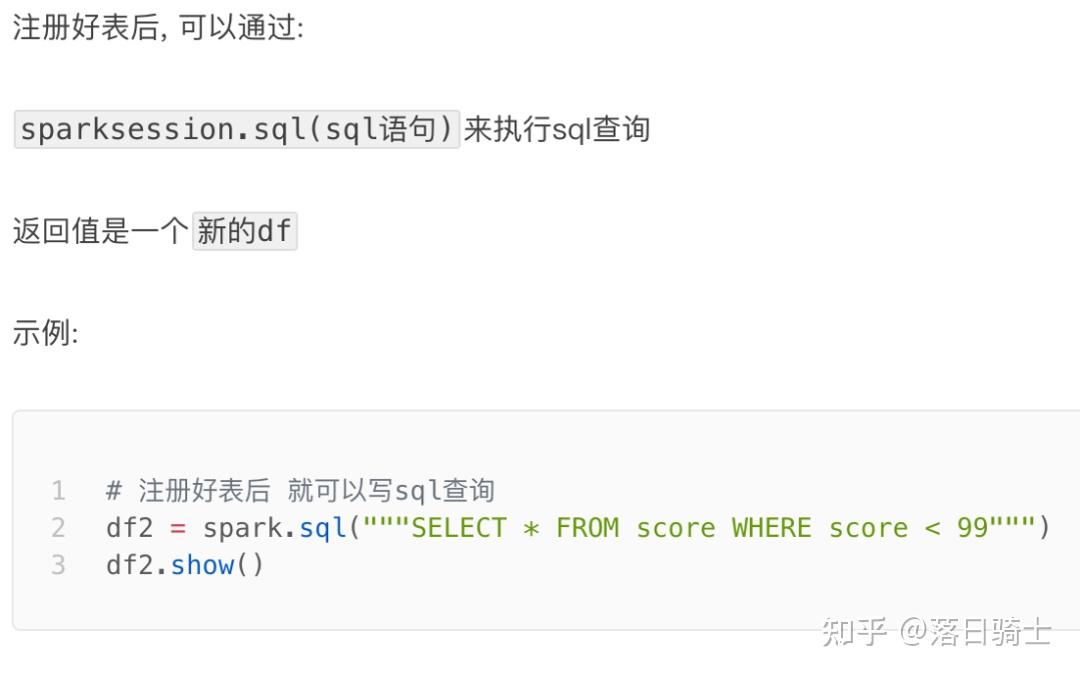

I'm trying to run PySpark on my MacBook Air. When I try starting it up, I get the error "Exception: Java gateway process exited before sending the driver its port number" when sc …
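
The question is truncated, so its cause is not known. One common cause of this error, assumed here, is that the launcher cannot find a working Java runtime. Spark's launcher script uses `$JAVA_HOME/bin/java` when `JAVA_HOME` is set and otherwise uses `java` from `PATH`. The hypothetical helper `check_java_for_pyspark` below approximates that lookup on macOS or Linux and reports what it finds before any `SparkContext` is created.

```python
import os
import shutil
import subprocess


def check_java_for_pyspark():
    """Report which Java executable PySpark's launcher is likely to pick up.

    Hypothetical helper: it approximates Spark's lookup order
    ($JAVA_HOME/bin/java if JAVA_HOME is set, else `java` on PATH).
    """
    java_home = os.environ.get("JAVA_HOME")
    if java_home:
        candidate = os.path.join(java_home, "bin", "java")
        java_path = candidate if os.path.isfile(candidate) else None
    else:
        java_path = shutil.which("java")

    version_output = None
    if java_path:
        try:
            # `java -version` writes its banner (or an error) to stderr, not stdout.
            result = subprocess.run(
                [java_path, "-version"], capture_output=True, text=True, timeout=30
            )
            output = (result.stderr or result.stdout).strip()
            version_output = output.splitlines()[0] if output else None
        except (OSError, subprocess.TimeoutExpired):
            version_output = None

    return {
        "JAVA_HOME": java_home,
        "java_executable": java_path,
        "java_version_output": version_output,
    }


info = check_java_for_pyspark()
print(info)
```

If `java_executable` is `None`, or the version output is an error message rather than a version banner, a likely next step is to install a JDK version supported by your Spark release and point `JAVA_HOME` at it before starting PySpark.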

Gallery for Pyspark Dataframe Apply

How To Use PySpark DataFrame API DataFrame Operations On Spark YouTube

How To Apply Filter And Sort Dataframe In Pyspark Pyspark Tutorial

PySpark SQL Left Outer Join With Example Spark By 53 OFF

Mukovhe Mukwevho Medium

PySpark UDF

PySpark DataFrame

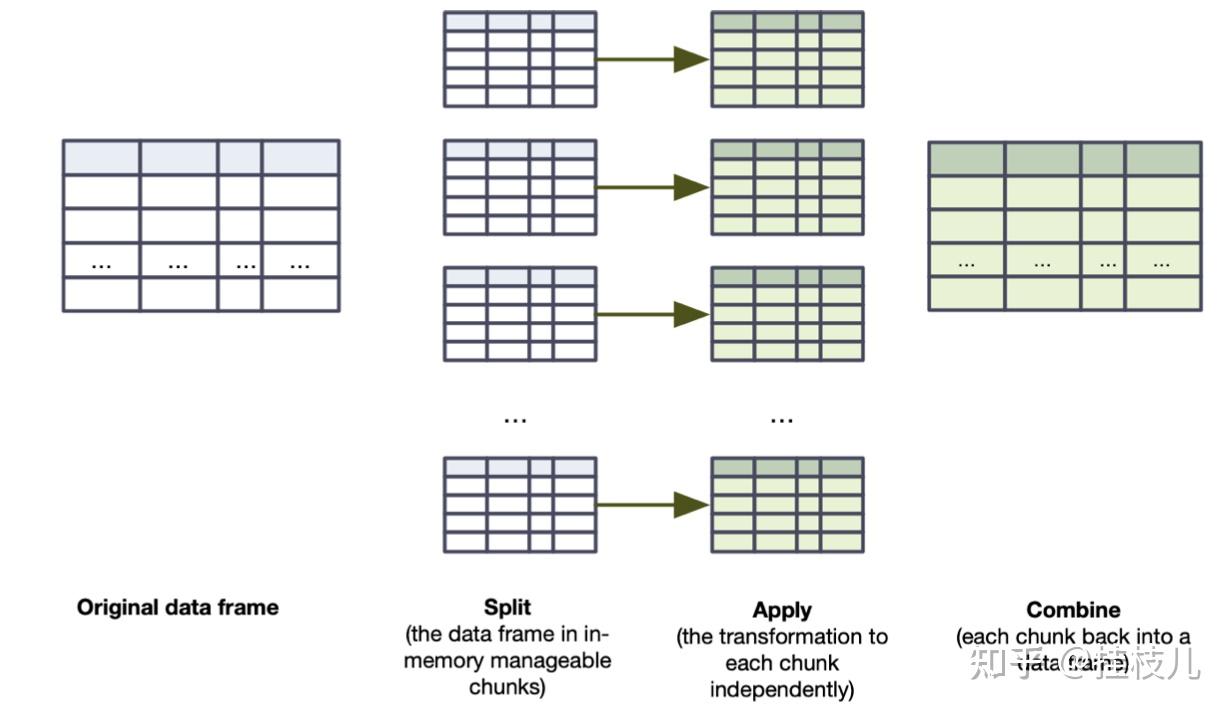

Optimizing Pandas

Pandas apply Series apply DataFrame apply

Pandas

PySpark Group A DataFrame And Apply Aggregations